Docker: From Beginner to Expert - A Comprehensive Guide

Master Docker through practical examples, real-world case studies, code snippets, and in-depth topics

Table of contents

- Introduction

- Docker Fundamentals

- Setting up Docker on Your Machine

- Creating and Managing Docker Containers and Images

- Dockerfile, docker-compose, and Networking

- Docker Internals

- Docker Volumes and Data Persistence

- Optimizing Docker Images

- Docker Security Practices

- Container Orchestration with Docker Swarm and Kubernetes

- Real-world Case Studies and Best Practices

- A Sample Java Project to Demonstrate Docker Capabilities

- CI/CD Pipeline with Jenkins and AWS ECR

- Conclusion

Introduction

Docker is a powerful and widely used platform that simplifies the development, deployment, and management of applications by using containerization technology. In this comprehensive guide, we will walk you through everything you need to know about Docker, from basic concepts to advanced techniques. By the end of this guide, you will have the knowledge and confidence to use Docker like an expert.

Docker Fundamentals

What is Docker?

Docker is an open-source platform for building, shipping, and running applications in lightweight, portable containers. Containers are isolated environments that package everything an application needs to run, including the code, runtime, libraries, and system tools, while sharing the host's operating-system kernel. This makes it easy to build, share, and deploy applications consistently across various environments and platforms.

Why Use Docker?

Docker offers several benefits:

Consistency: Applications run the same way in every environment, reducing "it works on my machine" issues.

Portability: Containers can be easily moved between different systems and cloud providers.

Scalability: Containers can be quickly started, stopped, and scaled up or down to meet demand.

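Isolation: Each container gets its own filesystem, process tree, and network stack, which keeps applications from interfering with each other and improves security and resource management. Because containers share the host kernel, this isolation is weaker than that of a virtual machine.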

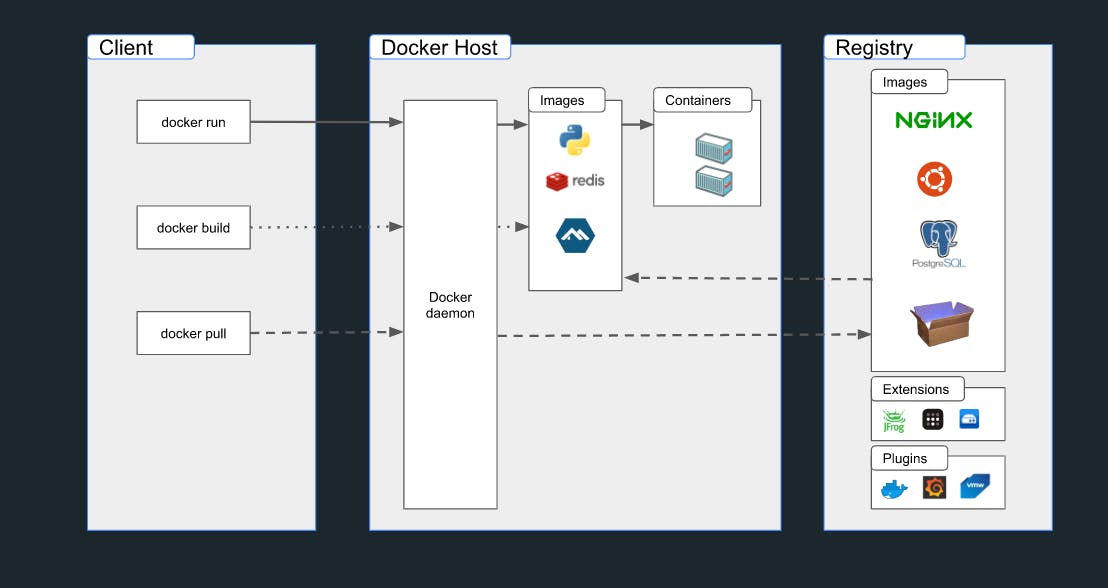

Docker Architecture

Docker uses a client-server architecture, consisting of the following components:

Docker Client: The docker command-line tool you interact with. It translates your commands into REST API calls and sends them to the Docker Daemon, usually over a local Unix socket (or a named pipe on Windows).

Docker Daemon: The background service responsible for building, running, and managing containers. It listens for requests from the Docker Client and processes them.

Docker Images: Read-only templates used to create containers. They are stored in Docker registries, such as Docker Hub or private registries.

Docker Containers: Isolated environments created from Docker images to run applications and their dependencies.

Docker Registries: Centralized services for storing and distributing Docker images.
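Because the daemon exposes its functionality as a REST API, the CLI is only one possible client. The sketch below uses nothing but Python's standard library to send GET /version over the daemon's Unix socket, which is roughly the information docker version shows for the server. It assumes a Linux host where the daemon listens on the default /var/run/docker.sock and your user may access it (root or membership in the docker group); Docker Desktop may expose the socket at a different path. The names UnixHTTPConnection and engine_get are hypothetical.

```python
import http.client
import json
import socket


class UnixHTTPConnection(http.client.HTTPConnection):
    """An HTTPConnection that connects to a Unix domain socket instead of TCP."""

    def __init__(self, socket_path: str, timeout: float = 5.0) -> None:
        # "localhost" only fills the Host header; the socket path decides where we connect.
        super().__init__("localhost", timeout=timeout)
        self.socket_path = socket_path

    def connect(self) -> None:
        sock = socket.socket(socket.AF_UNIX, socket.SOCK_STREAM)
        sock.settimeout(self.timeout)
        sock.connect(self.socket_path)
        self.sock = sock


def engine_get(path: str, socket_path: str = "/var/run/docker.sock") -> dict:
    """Send GET <path> to the Docker Engine API and return the decoded JSON body."""
    conn = UnixHTTPConnection(socket_path)
    try:
        conn.request("GET", path)
        resp = conn.getresponse()
        body = resp.read()
        if resp.status != 200:
            raise RuntimeError(f"GET {path} returned HTTP {resp.status}: {body!r}")
        return json.loads(body)
    finally:
        conn.close()
```

On a machine with a running daemon, engine_get("/version")["ApiVersion"] returns the API version the daemon speaks, the same value reported by docker version.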

Setting up Docker on Your Machine

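To get started with Docker, install Docker Desktop for your operating system (Windows, macOS, or Linux); on Linux servers you can alternatively install Docker Engine on its own. Follow the official installation guide in the Docker documentation (docs.docker.com).

After the installation is complete, open a terminal or command prompt and run the following command to check that the Docker CLI is installed:

docker --version

You should see the Docker version number as the output. This only checks the client, so also run the following command to confirm that the daemon can pull and run containers:

docker run hello-world

If everything works, the hello-world container prints a short welcome message and exits.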

Creating and Managing Docker Containers and Images

Docker uses images as the basis for containers. Images are lightweight, portable, and versioned snapshots of an application and its dependencies. Containers are created from these images and run as isolated instances of the application.

Creating a Docker Image

To create a Docker image, you need a Dockerfile. A Dockerfile is a script that contains instructions for building an image. Let's create a simple Dockerfile for a Python application:

# Use the official Python base image

FROM python:3.8

# Set the working directory

WORKDIR /app

# Copy requirements file into the container

COPY requirements.txt .

# Install dependencies

RUN pip install --no-cache-dir -r requirements.txt

# Copy the application code into the container

COPY . .

# Expose the application port

EXPOSE 8080

# Run the application

CMD ["python", "app.py"]
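The Dockerfile copies an app.py and a requirements.txt that the guide does not show. Below is the core of a hypothetical app.py that uses only the standard library (so requirements.txt can be an empty file). The important detail for containers is that it binds to 0.0.0.0 rather than 127.0.0.1, because traffic published with -p arrives on the container's network interface, not on its loopback.

```python
from http.server import BaseHTTPRequestHandler, HTTPServer


class HelloHandler(BaseHTTPRequestHandler):
    """Answers every GET request with a short plain-text greeting."""

    def do_GET(self) -> None:
        body = b"Hello from inside a container!\n"
        self.send_response(200)
        self.send_header("Content-Type", "text/plain; charset=utf-8")
        self.send_header("Content-Length", str(len(body)))
        self.end_headers()
        self.wfile.write(body)


def make_server(port: int = 8080) -> HTTPServer:
    # Bind to 0.0.0.0, not 127.0.0.1: inside the container, 127.0.0.1 is the
    # container's own loopback, so connections forwarded by -p 8080:8080
    # would never reach a server that listens only there.
    return HTTPServer(("0.0.0.0", port), HelloHandler)
```

For the Dockerfile's CMD to start it, app.py would end with a main guard such as if __name__ == "__main__": make_server().serve_forever(). Port 8080 matches the EXPOSE instruction and the -p mapping used below.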

To build the Docker image, navigate to the directory containing the Dockerfile and run:

docker build -t my-python-app .

Running a Docker Container

To run a Docker container from the created image, use the following command:

docker run -d -p 8080:8080 --name my-python-app-instance my-python-app

This command will create and run a container named my-python-app-instance using the my-python-app image. The -d flag runs the container in detached mode, while -p 8080:8080 maps the host's port 8080 to the container's port 8080.
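The order inside -p is always HOST:CONTAINER, optionally preceded by a host IP address and followed by a protocol. The hypothetical parser below handles only that common subset (no IPv6 addresses, port ranges, or container-port-only values); it is meant to make the order explicit, not to replicate Docker's own parsing.

```python
from typing import NamedTuple, Optional


class PortMapping(NamedTuple):
    host_ip: Optional[str]  # None means Docker listens on all host interfaces
    host_port: int
    container_port: int
    protocol: str


def parse_publish(value: str) -> PortMapping:
    """Parse [HOST_IP:]HOST_PORT:CONTAINER_PORT[/PROTOCOL], a subset of -p syntax."""
    ports, _, protocol = value.partition("/")
    parts = ports.split(":")
    if len(parts) == 2:
        host_ip = None
        host_port, container_port = parts
    elif len(parts) == 3:
        host_ip, host_port, container_port = parts
    else:
        raise ValueError(f"unsupported -p value: {value!r}")
    return PortMapping(host_ip, int(host_port), int(container_port), protocol or "tcp")


# The mapping used by the docker run command above:
print(parse_publish("8080:8080"))
# PortMapping(host_ip=None, host_port=8080, container_port=8080, protocol='tcp')
```

With -p 80:8080, for example, host_port is 80 and container_port is 8080: clients connect to port 80 on the host, and the application inside the container listens on 8080.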

Listing Docker Containers

To list all running containers, use the docker ps command:

docker ps

To list all containers, including the stopped ones, add the -a flag:

docker ps -a
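For scripting, docker ps can print one JSON object per container with --format '{{json .}}'. The sketch below wraps that in Python; list_containers and parse_ps_output are hypothetical helper names, the code assumes the docker CLI is on the PATH, and the exact set of JSON fields depends on your Docker version (Names, Image, and Status are among them).

```python
import json
import subprocess
from typing import Dict, List


def parse_ps_output(output: str) -> List[Dict[str, str]]:
    """Turn `docker ps --format '{{json .}}'` output (one JSON object per line) into dicts."""
    return [json.loads(line) for line in output.splitlines() if line.strip()]


def list_containers(include_stopped: bool = False) -> List[Dict[str, str]]:
    """Run docker ps (adding -a when include_stopped is True) and parse its JSON output."""
    cmd = ["docker", "ps", "--format", "{{json .}}"]
    if include_stopped:
        cmd.insert(2, "-a")  # equivalent to `docker ps -a`
    result = subprocess.run(cmd, capture_output=True, text=True, check=True)
    return parse_ps_output(result.stdout)
```

For example, [c["Names"] for c in list_containers(include_stopped=True)] would list every container name, running or stopped.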

Stopping and Removing Docker Containers

To stop a running container, use the docker stop command:

docker stop my-python-app-instance

To remove a stopped container, use the docker rm command:

docker rm my-python-app-instance

Dockerfile, docker-compose, and Networking

Dockerfile Best Practices

A well-structured Dockerfile can improve the efficiency and maintainability of your Docker images. Some best practices include:

Using official base images.

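Grouping related commands, such as updating the package index and installing packages, into a single RUN instruction.

Utilizing multi-stage builds to minimize image size.

Ordering instructions from least to most frequently changing (for example, copying requirements.txt and installing dependencies before copying the rest of the source code) so that Docker can reuse cached layers.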

Docker Compose

Docker Compose is a tool for defining and running multi-container Docker applications. It uses a YAML file (docker-compose.yml) to specify the services, networks, and volumes for your application. This makes it easy to manage and deploy complex applications with multiple containers.

Here's an example docker-compose.yml file for a simple web application with a frontend, backend, and database:

version: '3.8'
services:
  frontend:
    build: ./frontend
    ports:
      - '80:80'
  backend:
    build: ./backend
    ports:
      - '8080:8080'
  db:
    image: 'postgres:latest'
    environment:
      POSTGRES_USER: 'user'
      POSTGRES_PASSWORD: 'password'

To start the application, run docker compose up (or docker-compose up with the older standalone Compose binary) in the directory containing the docker-compose.yml file.
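Compose attaches all three services to a default network for the project, and each service is reachable there by its service name. That is why the db service needs no ports entry: the backend can connect to db:5432 directly. A hypothetical helper the backend might use to build its connection string is sketched below. The DB_* environment variable names are assumptions (the compose file above does not set them), and the default database name reflects the postgres image's behavior of creating a database named after POSTGRES_USER when POSTGRES_DB is not set.

```python
import os
from typing import Mapping, Optional
from urllib.parse import quote


def database_url(env: Optional[Mapping[str, str]] = None) -> str:
    """Build the backend's PostgreSQL URL; the DB_* variable names are hypothetical."""
    env = os.environ if env is None else env
    host = env.get("DB_HOST", "db")  # the Compose service name doubles as the hostname
    port = env.get("DB_PORT", "5432")  # container port; no published port is needed
    user = quote(env.get("DB_USER", "user"), safe="")  # matches POSTGRES_USER
    password = quote(env.get("DB_PASSWORD", "password"), safe="")  # matches POSTGRES_PASSWORD
    # Without POSTGRES_DB, the postgres image creates a database named after POSTGRES_USER.
    name = env.get("DB_NAME", "user")
    return f"postgresql://{user}:{password}@{host}:{port}/{name}"
```

Percent-encoding the user name and password keeps characters such as @ or : from breaking the URL.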

Docker Networking

Unless you specify otherwise, Docker attaches containers to a default bridge network. On that default network, containers can reach each other only by IP address; user-defined networks add name-based discovery and give you greater control over container communication and isolation. Use the following command to create a custom network:

docker network create my-custom-network

To connect a container to a network, use the --network flag when running the container:

docker run -d --network my-custom-network --name my-container my-image

Scenario 1: Creating a User-defined Bridge Network

In this scenario, we'll create a user-defined bridge network and connect two containers to that network, enabling communication between them.

- Create a user-defined bridge network:

docker network create my-bridge-network

- Start two containers connected to my-bridge-network:

docker run -d --name container-1 --network my-bridge-network my-image

docker run -d --name container-2 --network my-bridge-network my-image

Now, container-1 and container-2 can communicate with each other using their container names as hostnames, because Docker's embedded DNS server resolves container names on user-defined networks. For example, if both containers run web servers on port 80 and my-image includes curl, you can send an HTTP request from container-1 to container-2:

docker exec -it container-1 curl http://container-2
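If my-image does not ship curl but does include Python, a similar check can be done with the standard library. The hypothetical can_reach function below resolves a container name (on a user-defined network, through Docker's embedded DNS) and attempts a TCP connection; like the curl example, it assumes the target listens on port 80.

```python
import socket


def can_reach(host: str, port: int = 80, timeout: float = 2.0) -> bool:
    """Resolve `host` and try a TCP connection; True if something accepts it."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        # Covers DNS failures (socket.gaierror), refused connections, and timeouts.
        return False
```

Run from inside container-1 (for example, in a Python shell started with docker exec), can_reach("container-2") should return True while both containers share my-bridge-network.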

Scenario 2: Exposing Container Ports to the Host

In this scenario, we'll expose a container's port to the host system, allowing external access to a service running inside the container.

- Start a container whose web server listens on port 8080, and publish that port on port 80 of the host system (the -p value is HOST:CONTAINER):

docker run -d --name my-web-server -p 80:8080 my-web-server-image

Now, the web server running inside my-web-server container is accessible on the host system at http://localhost:80.

Scenario 3: Connecting Containers across Different Networks

In this scenario, we'll connect two containers that are on different networks, allowing communication between them.

- Create two user-defined bridge networks:

docker network create network-1

docker network create network-2

- Start two containers, each connected to a different network:

docker run -d --name container-1 --network network-1 my-image

docker run -d --name container-2 --network network-2 my-image

- Connect container-1 to network-2:

docker network connect network-2 container-1

Now container-1 is attached to both network-1 and network-2. Because container-1 and container-2 share network-2, they can communicate with each other using their container names as hostnames, while container-2 still has no access to network-1.

Docker Internals

Docker Storage Drivers

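Docker uses storage drivers to manage the filesystem layers of images and containers. Available drivers include overlay2 (the default and recommended driver on current Linux distributions), btrfs, and zfs; the older aufs driver is deprecated and has been removed from recent Docker Engine releases. The choice of storage driver depends on the host's operating system, backing filesystem, and requirements such as performance and compatibility. You can check which driver your daemon uses with docker info --format '{{.Driver}}'. For more information on storage drivers, refer to the official Docker documentation.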

Logging and Monitoring

Docker provides built-in logging and monitoring capabilities for containers. By default, Docker captures the standard output and standard error streams of a container and stores them as logs. You can view the logs using the docker logs command:

docker logs my-container
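Since docker logs only sees what the process writes to stdout and stderr, applications should log there rather than to files inside the container. A minimal Python sketch of that setup follows; configure_logging is a hypothetical helper. The logging module's StreamHandler flushes after every record, whereas plain print() output can sit in Python's buffer when stdout is not a terminal, which is why Python images commonly also set ENV PYTHONUNBUFFERED=1.

```python
import logging
import sys


def configure_logging(stream=None, level: int = logging.INFO) -> logging.Logger:
    """Route all log records to stdout (or `stream`) so Docker's logging driver captures them."""
    handler = logging.StreamHandler(stream if stream is not None else sys.stdout)
    handler.setFormatter(logging.Formatter("%(asctime)s %(levelname)s %(name)s: %(message)s"))
    root = logging.getLogger()
    root.handlers[:] = [handler]  # replace any existing handlers, e.g. file handlers
    root.setLevel(level)
    return logging.getLogger("app")
```

Calling configure_logging().info("listening on port %d", 8080) at startup would make that line appear in docker logs my-container.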

For more advanced monitoring, you can use tools like Prometheus and Grafana to collect and visualize container metrics. For centralized logging, consider using services like ELK Stack (Elasticsearch, Logstash, and Kibana) or Datadog to aggregate and analyze logs from multiple containers.

Docker Volumes and Data Persistence

Docker containers are ephemeral by nature, meaning any data created inside a container is lost when the container is removed. To persist data and share it between containers or with the host system, Docker provides volumes.

Docker Volume Types

There are three main ways to give a container storage that outlives its writable layer:

Bind Mounts (sometimes called host volumes): These map a directory on the host system to a directory inside the container, for example -v /path/on/host:/app/data. Bind mounts are useful for sharing data between the host and a container, such as source code during development.

Anonymous Volumes: These volumes are created automatically (for example, by a VOLUME instruction in the image) and receive a random name instead of an explicit one. They survive a container stop, but they are removed along with the container if it was started with --rm or removed with docker rm -v, so they suit data you do not need to keep or share.

Named Volumes: These volumes are created with an explicit name and can be easily referenced and managed. Named volumes are useful for sharing data between containers and for keeping data after a container is removed and recreated.

Creating and Managing Docker Volumes

To create a named volume, use the docker volume create command:

docker volume create my-volume

To list all volumes on your system, use the docker volume ls command:

docker volume ls

To remove a volume, use the docker volume rm command:

docker volume rm my-volume

Using Volumes with Docker Containers

To use a volume with a Docker container, use the --mount or -v flag when running the container:

docker run -d --name my-container --mount source=my-volume,target=/app/data my-image

or

docker run -d --name my-container -v my-volume:/app/data my-image

Either command creates and runs a container named my-container from the my-image image and mounts the named volume my-volume at /app/data inside the container. If my-volume does not exist yet, Docker creates it automatically.
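To see persistence in action, imagine my-image runs the hypothetical script below, which keeps a visit counter in a file under /app/data (the DATA_DIR override is also an assumption). Because visits.txt lives in my-volume rather than in the container's writable layer, the count would keep rising across docker run --rm -v my-volume:/app/data my-image invocations, whereas without the volume every new container would start again at 1.

```python
import os
from pathlib import Path
from typing import Optional


def bump_counter(data_dir: Optional[str] = None) -> int:
    """Increment a visit counter stored in the mounted volume and return the new value."""
    # /app/data matches the mount target used above; DATA_DIR is a hypothetical override.
    directory = Path(data_dir or os.environ.get("DATA_DIR", "/app/data"))
    directory.mkdir(parents=True, exist_ok=True)
    counter = directory / "visits.txt"
    count = int(counter.read_text()) + 1 if counter.exists() else 1
    counter.write_text(str(count))
    return count
```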

For more details and examples about Docker volumes, see https://spacelift.io/blog/docker-volumes

Optimizing Docker Images

Optimizing Docker images is crucial for reducing build times, improving startup times, and minimizing the attack surface. Here are some tips for optimizing your Docker images:

Use Multi-stage Builds

Multi-stage builds allow you to use multiple FROM statements in a single Dockerfile. This helps to reduce the final image size by only including the necessary files and dependencies for your application.

# First stage: Build the application

FROM node:14 AS build

WORKDIR /app

COPY package*.json ./

RUN npm install

COPY . .

RUN npm run build

# Second stage: Create the final image

FROM node:14-alpine

WORKDIR /app

COPY --from=build /app/dist /app/dist

COPY package*.json ./

RUN npm install --production

CMD ["npm", "start"]

Minimize the Number of Layers

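Each RUN, COPY, and ADD instruction creates a new filesystem layer (other instructions only add metadata). Files deleted in a later layer still take up space in the earlier one, so combine related commands into a single RUN instruction using the && operator. Keeping apt-get update and apt-get install together also stops Docker from reusing a stale, cached package index: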

# Before

RUN apt-get update

RUN apt-get install -y curl

RUN apt-get clean

# After

RUN apt-get update && \
    apt-get install -y curl && \
    apt-get clean

Remove Unnecessary Files and Dependencies

Only include the files and dependencies required to run your application. Use a .dockerignore file to exclude unnecessary files and directories from the build context:

.git

node_modules

*.log

Also, remove any temporary files or caches created during the build process using a single RUN command:

RUN apt-get update && \
    apt-get install -y curl && \
    apt-get clean && \
    rm -rf /var/lib/apt/lists/*

Use Official Base Images

When possible, use official base images provided by the maintainers of the programming language or framework you are using. These images are often optimized for size and performance and receive regular updates.

Docker Security Practices

Securing your Docker environment is crucial to protect your applications and infrastructure. Here are some essential Docker security practices:

Keep Your Docker Images Up to Date

Regularly update your base images and dependencies to ensure that you're using the latest security patches. You can use tools like Dependabot or Renovate to automate this process.

Use Minimal Base Images

When possible, use minimal base images like Alpine Linux that include only the necessary components for your application. This reduces the attack surface and minimizes potential vulnerabilities.

Scan Images for Vulnerabilities

Scan your Docker images for known vulnerabilities using tools like Trivy, Snyk, or Clair. Integrating these scanners into your CI/CD pipeline lets you catch vulnerable packages before an image is deployed, although scanning only finds issues that are already publicly known.

Implement Least-Privilege Principles

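Avoid running containers as the root user and use the least-privileged user necessary to run your application. You can do this by switching to a non-root user in your Dockerfile with the USER instruction. A numeric UID such as 1001 works even if no matching user exists in the image, but the application must then avoid writing to root-owned paths: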

USER 1001

Limit Resource Usage

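Limit the resources (CPU, memory, disk I/O, etc.) available to your containers so that a single compromised or misbehaving container cannot exhaust the host. --memory is a hard cap on the container's RAM, and the kernel's OOM killer steps in if the container exceeds its allowance. By contrast, --cpu-shares and --blkio-weight are relative weights that only matter when containers compete for the resource (and --blkio-weight depends on the host's I/O scheduler); use --cpus if you need a hard CPU cap: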

docker run -d --memory 256M --cpu-shares 512 --blkio-weight 500 my-image
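An application can read the limit set with --memory from inside the container, for example to size caches or worker pools. The sketch below checks the cgroup v2 file first and then the cgroup v1 file. These paths are the usual mount locations inside a container but remain an assumption about your host setup, and treating very large v1 values as "unlimited" is a heuristic.

```python
from pathlib import Path
from typing import Iterable, Optional

# Usual locations inside a container: cgroup v2 first, then cgroup v1.
CGROUP_MEMORY_FILES = (
    "/sys/fs/cgroup/memory.max",
    "/sys/fs/cgroup/memory/memory.limit_in_bytes",
)


def container_memory_limit(candidates: Iterable[str] = CGROUP_MEMORY_FILES) -> Optional[int]:
    """Return the container's memory limit in bytes, or None if unlimited or unknown."""
    for name in candidates:
        path = Path(name)
        if not path.is_file():
            continue
        raw = path.read_text().strip()
        if raw == "max":  # cgroup v2 writes "max" when no limit is set
            return None
        limit = int(raw)
        # cgroup v1 reports "no limit" as a huge value near 2**63 (heuristic cutoff).
        return None if limit >= 2**60 else limit
    return None
```

With --memory 256M, the limit file should contain 268435456 (256 MiB), which is what container_memory_limit() would return.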

Use Secure Communication

When possible, use secure communication methods like HTTPS, TLS, or SSH to encrypt communication between containers and external services. This helps protect sensitive data from being intercepted or tampered with.
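For outbound HTTPS calls from a Python service, the standard library's ssl.create_default_context() already verifies certificates and hostnames. The hypothetical helper below optionally trusts a private CA bundle instead of the system store (for instance, a bundle mounted into the container) and refuses protocol versions older than TLS 1.2.

```python
import ssl
from typing import Optional


def make_client_tls_context(ca_file: Optional[str] = None) -> ssl.SSLContext:
    """Client-side TLS context that verifies server certificates and hostnames."""
    # create_default_context() enables CERT_REQUIRED and hostname checking.
    # If ca_file is given, only that bundle is trusted instead of the system CA store.
    ctx = ssl.create_default_context(cafile=ca_file)
    # Refuse protocol versions older than TLS 1.2.
    ctx.minimum_version = ssl.TLSVersion.TLSv1_2
    return ctx
```

The context can then be passed to clients such as urllib.request.urlopen(url, context=make_client_tls_context()).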

Monitor and Audit Your Docker Environment

Monitor your Docker environment for unusual activity, such as unauthorized access, resource usage spikes, or new vulnerabilities. Use tools like Falco or Docker Bench for Security to audit your environment and ensure that you're following best practices.

By implementing these security practices, you can minimize potential risks and protect your Docker environment.

Container Orchestration with Docker Swarm and Kubernetes

Container orchestration tools like Docker Swarm and Kubernetes help manage, scale, and maintain containerized applications across multiple hosts.

Docker Swarm

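Docker Swarm is Docker's native clustering and orchestration tool. In swarm mode, which is built into Docker Engine, a group of Docker hosts forms a cluster that you manage as a single unit by deploying services rather than individual containers. Some key features of Docker Swarm include: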

Service discovery

Load balancing

Rolling updates and rollbacks

Scaling and replication

Kubernetes

Kubernetes is a powerful container orchestration platform originally developed by Google and now maintained under the Cloud Native Computing Foundation (CNCF). It offers a robust set of features for managing containerized applications, including:

Automatic scaling

Self-healing

Load balancing and service discovery

Storage orchestration

For a detailed comparison of Docker Swarm and Kubernetes, check out this article.

Real-world Case Studies and Best Practices

Case Study: Spotify

Spotify, a leading music streaming service, adopted Docker to streamline their development process and improve resource utilization. By using Docker, Spotify reduced build times, increased development velocity, and improved resource usage across their infrastructure. Read more about Spotify's experience with Docker here.

Case Study: PayPal

PayPal, a global online payment platform, transitioned to Docker and a microservices architecture to increase scalability, improve release cycles, and optimize resources. Docker played a crucial role in enabling PayPal to break down their monolithic application into smaller, more manageable services. Learn more about PayPal's Docker adoption here.

Best Practices

Use a container-centric CI/CD pipeline to automate the build, test, and deployment of containerized applications.

Monitor and log container performance and resource usage to identify bottlenecks and optimize resource allocation.

Implement security best practices, such as scanning images for vulnerabilities, using least-privilege principles, and isolating containers with custom networks.

A Sample Java Project to Demonstrate Docker Capabilities

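In this section, we will create a simple Java web application using the Spring Boot framework and package it with Docker. You will need Java 17 or later (required by Spring Boot 3) and Maven installed on your machine to follow along; the generated project also includes a Maven wrapper (./mvnw) that you can use instead of a local Maven installation.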

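Create a new Spring Boot project using Spring Initializr (start.spring.io). Set the Artifact to my-java-app (the Dockerfile below relies on this name when copying the JAR), select the "Spring Web" dependency, and download the project as a ZIP file.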

Extract the ZIP file and navigate to the project directory in your terminal or command prompt.

Build the project using Maven:

mvn clean install

Create a Dockerfile in the project directory with the following contents:

# Use an Eclipse Temurin JRE base image (the openjdk image is deprecated, and Spring Boot 3 needs Java 17+)
FROM eclipse-temurin:17-jre
# Set the working directory
WORKDIR /app
# Copy the JAR file built by Maven into the container
COPY target/my-java-app-*.jar my-java-app.jar
# Expose the application port
EXPOSE 8080
# Run the application
CMD ["java", "-jar", "my-java-app.jar"]

Build the Docker image:

docker build -t my-java-app .

Run the Docker container:

docker run -d -p 8080:8080 --name my-java-app-instance my-java-app

Access the application in your browser by navigating to http://localhost:8080. Because the generated project does not define any endpoints yet, Spring Boot answers with its default "Whitelabel Error Page"; that response still confirms the application is running inside the container.

This example demonstrates how Docker can be used to package and deploy a Java web application consistently across different environments.

CI/CD Pipeline with Jenkins and AWS ECR

Integrating Docker with a CI/CD pipeline is essential for automating the build, test, and deployment processes of containerized applications. In this section, we'll cover the basics of using Jenkins for CI/CD and AWS ECR as a container registry.

Container Registry

A container registry is a service that stores and manages Docker images. It allows you to push and pull images to and from the registry, making it easy to share and distribute containerized applications. AWS Elastic Container Registry (ECR) is a fully-managed Docker container registry provided by Amazon Web Services (AWS).

Setting up AWS ECR

Create an AWS ECR repository by navigating to the ECR service in the AWS Management Console and clicking "Create repository".

Set the repository name and configure the required settings.

After creating the repository, note down the repository URI (e.g., 123456789012.dkr.ecr.us-west-2.amazonaws.com/my-repo).
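The repository URI combines the registry host with the repository name, and the Jenkins steps that follow use them separately: the registry URL for logging in and the repository name for tagging. The hypothetical parser below splits a standard ECR URI into those parts; it does not handle the amazonaws.com.cn domain used in the China regions.

```python
import re
from typing import NamedTuple

ECR_URI = re.compile(
    r"^(?P<account>\d{12})\.dkr\.ecr\.(?P<region>[a-z0-9-]+)\.amazonaws\.com/(?P<repo>[a-z0-9._/-]+)$"
)


class EcrRepository(NamedTuple):
    account: str
    region: str
    repo: str

    @property
    def registry_url(self) -> str:
        # The registry host only, without the repository path.
        return f"https://{self.account}.dkr.ecr.{self.region}.amazonaws.com"


def parse_ecr_uri(uri: str) -> EcrRepository:
    """Split <account>.dkr.ecr.<region>.amazonaws.com/<repo> into its parts."""
    m = ECR_URI.match(uri)
    if not m:
        raise ValueError(f"not an ECR repository URI: {uri!r}")
    return EcrRepository(m["account"], m["region"], m["repo"])
```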

Integrating Jenkins with Docker and AWS ECR

To set up a Jenkins pipeline that builds a Docker image and pushes it to AWS ECR, follow these steps:

Install the necessary Jenkins plugins, such as "Docker Pipeline" and "Amazon ECR".

Add your AWS access key and secret key to Jenkins as an AWS Credentials entry (this guide uses the ID aws-credentials-id). The Amazon ECR plugin uses these credentials to obtain a registry login for AWS ECR.

Create a new Jenkins pipeline job.

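In the pipeline script, include the following steps, all inside the same node block so that the image variable is shared between them:

a. Check out the source code from your version control system (e.g., Git). In a job loaded from a Jenkinsfile, checkout scm does this.

b. Build the Docker image using the docker.build() function. Naming the image after the ECR repository (my-repo) makes the push land in that repository:

def app = docker.build("my-repo:${env.BUILD_ID}")

c. Log in to AWS ECR using the docker.withRegistry() function. Pass the registry URL (without the repository path) and a credential reference of the form ecr:<region>:<credentials-id>, which the Amazon ECR plugin exchanges for a temporary registry login.

d. Push the Docker image to the ECR repository from inside that withRegistry block:

docker.withRegistry('https://123456789012.dkr.ecr.us-west-2.amazonaws.com', 'ecr:us-west-2:aws-credentials-id') {
    // push() prefixes the image name with the registry before pushing
    app.push("${env.BUILD_ID}")
    app.push("latest")
}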

With this pipeline in place and the job triggered on every commit (for example, through a webhook from your Git host or SCM polling), Jenkins will automatically build a new Docker image and push it to your AWS ECR repository.

By integrating Jenkins and AWS ECR into your CI/CD pipeline, you can automate the process of building, testing, and deploying your containerized applications, improving the efficiency and reliability of your software development lifecycle.

Conclusion

Docker is a powerful platform that simplifies the development, deployment, and management of applications through containerization. This comprehensive guide has provided an overview of Docker's core concepts, tools, and best practices, as well as a sample Java project to demonstrate its capabilities. With this knowledge in hand, you are well-equipped to leverage Docker in your own projects and become a true Docker expert.