Mastering Kafka for Microservices: A Comprehensive Guide

Unlock the Potential of Event-Driven Architectures with Kafka

Table of contents

- Introduction

- What is Kafka?

- Microservices Architecture

- Key Features of Kafka

- Scalability

- Fault Tolerance

- High Throughput

- Low Latency

- Durability

- Security

- Kafka Fundamentals

- Kafka Architecture

- Producers and Consumers

- Broker, Topics, and Partitions

- Schema Management

- Kafka Protocols

- Kafka Connect

- Kafka Streams

- Microservice Architecture and Kafka

- Event-Driven Architecture

- Advantages of Using Kafka in Microservices

- Installation Guide

- Installing Kafka

- Running Kafka Locally

- Kafka Use Cases

- Case Studies

- Industries Utilizing Kafka

- Sample Project: Real-Time Order Processing System

- Kafka Topics

- How to create Kafka Topics?

- Command Model

- Choreography

- Java Producer: Order Service

- Java Consumer: Inventory Service

- Java Consumer: Shipping Service

- Running the Sample Project

- Extending the Sample Project: Real-Time Order Tracking and Analytics

- Kafka Topics

- Java Producer: Order Status Updates

- Java Consumer: Analytics Service

- Running the Extended Sample Project

- Example: Kafka Connect and JDBC Sink Connector

- Conclusion

Introduction

What is Kafka?

Apache Kafka is a distributed streaming platform that enables you to build real-time data pipelines and streaming applications. Originally developed at LinkedIn, Kafka has grown in popularity due to its high throughput, low latency, fault tolerance, and scalability. It provides a publish-subscribe model, where producers send messages to topics, and consumers read messages from those topics.

Microservices Architecture

Microservices architecture is a software design pattern that structures applications as a collection of small, loosely-coupled services. Each service is responsible for a specific piece of functionality and can be developed, deployed, and scaled independently. This architectural style promotes flexibility, resilience, and the ability to adapt to changing requirements.

Key Features of Kafka

Scalability

Kafka is designed to be highly scalable, allowing you to process large volumes of data and handle growing workloads. You can scale Kafka both horizontally (by adding more brokers to a cluster and spreading topic partitions across them) and vertically (by increasing the resources of existing brokers).

Fault Tolerance

Kafka is fault-tolerant, ensuring that your data remains available even in the face of failures. It achieves this by replicating messages across multiple brokers in a cluster. If one broker goes down, another broker with a replica of the data can continue serving the messages.

High Throughput

Kafka is optimized for high throughput, allowing you to process a large number of messages per second. This is achieved through efficient message storage, batching, and compression techniques.
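As a rough illustration of where batching and compression are configured, the Java producer exposes them as ordinary client properties. The sketch below only assembles a java.util.Properties object. The values (a 20 ms linger, 64 KiB batches, lz4 compression) are illustrative assumptions rather than recommendations, and the class name ThroughputTuning is hypothetical.

```java
import java.util.Properties;

// Hypothetical helper that collects throughput-related producer settings.
// The keys are standard Kafka producer configs; the values are illustrative only.
final class ThroughputTuning {
    static Properties producerProps(String bootstrapServers) {
        Properties props = new Properties();
        props.put("bootstrap.servers", bootstrapServers);
        // Wait up to 20 ms for more records so that sends can be grouped into one batch.
        props.put("linger.ms", "20");
        // Upper bound, in bytes, on a single per-partition batch.
        props.put("batch.size", Integer.toString(64 * 1024));
        // Compress whole batches on the producer; with the default topic setting,
        // brokers keep the producer's compression when storing them.
        props.put("compression.type", "lz4");
        return props;
    }

    public static void main(String[] args) {
        producerProps("localhost:9092").forEach((k, v) -> System.out.println(k + "=" + v));
    }
}
```

Passing these properties (together with serializers) to a KafkaProducer trades a few milliseconds of latency for larger, better-compressed batches, which is the tension between the throughput and latency points in this section.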

Low Latency

Kafka provides low-latency message delivery, which is critical for real-time data processing and analytics. It achieves this through its distributed architecture and by letting consumers read messages as soon as they have been written and replicated to the in-sync replicas of a partition.

Durability

Durability in Kafka refers to the system's ability to persist messages so that they survive failures. Kafka writes messages to disk in an append-only log and replicates them across multiple brokers, so messages can still be retrieved (and replayed) even if a broker goes down.
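Replication alone does not decide when a write counts as durable; that is negotiated between producer and topic settings. A minimal sketch, assuming a topic created with a replication factor of 3: the producer waits for all in-sync replicas (acks=all) and enables idempotence so retries do not write duplicates, while the topic-level min.insync.replicas=2 makes an acks=all write fail rather than succeed with a single copy. The class DurabilitySettings and its methods are hypothetical.

```java
import java.util.Map;
import java.util.Properties;

final class DurabilitySettings {
    // Producer side: a send is acknowledged only after all in-sync replicas have the record.
    static Properties producerProps(String bootstrapServers) {
        Properties props = new Properties();
        props.put("bootstrap.servers", bootstrapServers);
        props.put("acks", "all");
        // Idempotence lets the producer retry without writing duplicates into the log.
        props.put("enable.idempotence", "true");
        return props;
    }

    // Topic side: with replication factor 3, acks=all writes need at least 2 in-sync copies.
    static Map<String, String> topicConfig() {
        return Map.of("min.insync.replicas", "2");
    }

    // With acks=all, writes keep succeeding while up to this many replicas are unavailable.
    static int toleratedReplicaFailures(int replicationFactor, int minInsyncReplicas) {
        return replicationFactor - minInsyncReplicas;
    }

    public static void main(String[] args) {
        System.out.println(producerProps("localhost:9092"));
        System.out.println(topicConfig());
        System.out.println(toleratedReplicaFailures(3, 2)); // prints 1
    }
}
```

The topic setting could be applied when the topic is created, for example with kafka-topics.sh --config min.insync.replicas=2.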

Security

Kafka supports various security features to protect your data and infrastructure. It offers SSL/TLS for encrypting communication between clients and brokers, as well as SASL for authentication. You can also use ACLs (Access Control Lists) to define fine-grained permissions for producers, consumers, and administrative operations.
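On the client side, TLS encryption and SASL authentication are switched on through a handful of properties. The sketch below assumes a broker listener that accepts SASL_SSL with the SCRAM-SHA-512 mechanism; the truststore path, username, and password are placeholders, and SecureClientConfig is a hypothetical name.

```java
import java.util.Properties;

final class SecureClientConfig {
    static Properties saslSslProps(String bootstrapServers, String username, String password,
                                   String truststorePath, String truststorePassword) {
        Properties props = new Properties();
        props.put("bootstrap.servers", bootstrapServers);
        // TLS for encryption in transit, SASL for authenticating the client.
        props.put("security.protocol", "SASL_SSL");
        props.put("sasl.mechanism", "SCRAM-SHA-512");
        // JAAS entry for the SCRAM login module; real apps should load secrets from a vault or env.
        props.put("sasl.jaas.config",
                "org.apache.kafka.common.security.scram.ScramLoginModule required "
                        + "username=\"" + username + "\" password=\"" + password + "\";");
        // Truststore holding the CA certificate that signed the brokers' certificates.
        props.put("ssl.truststore.location", truststorePath);
        props.put("ssl.truststore.password", truststorePassword);
        return props;
    }

    public static void main(String[] args) {
        Properties props = saslSslProps("broker1:9096", "order-service", "changeit",
                "/etc/kafka/client.truststore.jks", "changeit");
        // Print only the keys so that secrets do not end up in logs.
        props.stringPropertyNames().forEach(System.out::println);
    }
}
```

Authorization is a separate layer: ACLs on the brokers decide what the authenticated principal (here, the SCRAM username) may read, write, or administer.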

Kafka Fundamentals

Kafka Architecture

Kafka's architecture consists of several components, including producers, consumers, brokers, topics, and partitions. Producers send messages to topics, while consumers read messages from topics. Brokers store and manage the messages, ensuring their availability to consumers. Topics are divided into partitions, which enable parallelism and scalability.
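To see why partitions are the unit of parallelism, consider how a consumer group shares a topic: each partition is read by exactly one consumer in the group, so consumers beyond the partition count sit idle. The sketch below is a simplified round-robin assignment in plain Java, written only to show that constraint; Kafka's real assignors (range, round-robin, sticky, cooperative-sticky) are more involved and run as part of the group protocol. PartitionAssignmentSketch is a hypothetical name.

```java
import java.util.ArrayList;
import java.util.LinkedHashMap;
import java.util.List;
import java.util.Map;

final class PartitionAssignmentSketch {
    // Deal partitions 0..numPartitions-1 out to the group's consumers in turn.
    static Map<String, List<Integer>> assign(List<String> consumers, int numPartitions) {
        if (consumers.isEmpty()) {
            throw new IllegalArgumentException("at least one consumer is required");
        }
        Map<String, List<Integer>> assignment = new LinkedHashMap<>();
        for (String consumer : consumers) {
            assignment.put(consumer, new ArrayList<>());
        }
        for (int p = 0; p < numPartitions; p++) {
            String owner = consumers.get(p % consumers.size());
            assignment.get(owner).add(p);
        }
        return assignment;
    }

    public static void main(String[] args) {
        // A 3-partition topic shared by 2 and then 4 consumers in the same group.
        System.out.println(assign(List.of("c1", "c2"), 3));
        // prints {c1=[0, 2], c2=[1]}
        System.out.println(assign(List.of("c1", "c2", "c3", "c4"), 3));
        // prints {c1=[0], c2=[1], c3=[2], c4=[]}
    }
}
```

The second call shows the practical rule: to add consumers to a group, you usually need at least as many partitions as consumers.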

Producers and Consumers

Producers are applications that generate and send messages to Kafka topics. They are responsible for serializing messages and assigning them to the appropriate topic partitions. Consumers are applications that read and process messages from Kafka topics. They can subscribe to one or more topics and deserialize the messages before processing them.

Broker, Topics, and Partitions

A broker is a Kafka server that stores and manages messages. It receives messages from producers, appends them to logs on disk, and serves them to consumers when they fetch (Kafka consumers pull data from brokers rather than having it pushed to them). Topics are logical channels for categorizing messages, and partitions divide topics into smaller, ordered units for parallel processing and increased scalability.
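Assigning a message to a partition is usually driven by the record key. To our knowledge, the Java client's default partitioner hashes a non-null key with murmur2 and takes the result modulo the partition count, so every record with the same key (for example, the same order ID) lands in the same partition and keeps its relative order. The sketch below reimplements that hash in plain Java for illustration only; it is not the client's own code, records without a key are distributed differently, and KeyPartitioner is a hypothetical name.

```java
import java.nio.charset.StandardCharsets;

final class KeyPartitioner {
    // 32-bit murmur2 hash, following the variant used by Kafka's Java client.
    static int murmur2(byte[] data) {
        final int length = data.length;
        final int seed = 0x9747b28c;
        final int m = 0x5bd1e995;
        final int r = 24;
        int h = seed ^ length;
        final int length4 = length / 4;
        for (int i = 0; i < length4; i++) {
            final int i4 = i * 4;
            int k = (data[i4] & 0xff) + ((data[i4 + 1] & 0xff) << 8)
                    + ((data[i4 + 2] & 0xff) << 16) + ((data[i4 + 3] & 0xff) << 24);
            k *= m;
            k ^= k >>> r;
            k *= m;
            h *= m;
            h ^= k;
        }
        // Mix in the trailing 1-3 bytes (intentional switch fall-through).
        switch (length % 4) {
            case 3:
                h ^= (data[(length & ~3) + 2] & 0xff) << 16;
            case 2:
                h ^= (data[(length & ~3) + 1] & 0xff) << 8;
            case 1:
                h ^= data[length & ~3] & 0xff;
                h *= m;
        }
        h ^= h >>> 13;
        h *= m;
        h ^= h >>> 15;
        return h;
    }

    // Clear the sign bit so the modulo result is never negative.
    static int partitionFor(String key, int numPartitions) {
        byte[] bytes = key.getBytes(StandardCharsets.UTF_8);
        return (murmur2(bytes) & 0x7fffffff) % numPartitions;
    }

    public static void main(String[] args) {
        for (String key : new String[] {"order-1", "order-2", "order-1"}) {
            System.out.println(key + " -> partition " + partitionFor(key, 3));
        }
    }
}
```

The repeated "order-1" always maps to the same partition, which is why the sample services below key each record by order ID.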

Schema Management

A schema is a blueprint for the structure of data within a message. It defines the data fields and their data types. In Kafka, using a schema registry helps maintain consistency, compatibility, and evolution of the message schemas across producers and consumers. Confluent's Schema Registry is a popular choice for managing Avro schemas in Kafka.

Kafka Protocols

Kafka uses a custom binary protocol for communication between clients and brokers. This protocol is optimized for efficiency and performance, ensuring low-latency message delivery and high throughput. The protocol supports various API requests and responses, such as producing and consuming messages, fetching metadata, and managing group coordination for consumer groups.
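To make "custom binary protocol" concrete: every request travels as a 4-byte big-endian size followed by a request header and a body. The sketch below frames an ApiVersions request (API key 18), which clients typically send first to learn which API versions a broker supports. It uses request header version 1 (api_key, api_version, correlation_id, client_id) and the empty version-0 body, and only builds bytes in memory with java.nio.ByteBuffer. RequestFraming is a hypothetical name; real clients rely on the generated message classes in the Kafka client library.

```java
import java.nio.ByteBuffer;
import java.nio.charset.StandardCharsets;

final class RequestFraming {
    static final short API_VERSIONS_KEY = 18;

    // [size:int32][api_key:int16][api_version:int16][correlation_id:int32][client_id:string]
    // followed by an empty body (ApiVersions v0 has no fields).
    static ByteBuffer apiVersionsRequest(int correlationId, String clientId) {
        byte[] client = clientId.getBytes(StandardCharsets.UTF_8);
        int headerSize = 2 + 2 + 4 + 2 + client.length;
        int bodySize = 0;
        ByteBuffer buf = ByteBuffer.allocate(4 + headerSize + bodySize); // big-endian by default
        buf.putInt(headerSize + bodySize);   // size excludes the 4-byte length prefix itself
        buf.putShort(API_VERSIONS_KEY);
        buf.putShort((short) 0);             // api_version
        buf.putInt(correlationId);           // echoed in the response so it can be matched up
        buf.putShort((short) client.length); // int16 length prefix of the client_id string
        buf.put(client);
        buf.flip();
        return buf;
    }

    public static void main(String[] args) {
        ByteBuffer frame = apiVersionsRequest(1, "order-service");
        System.out.println(frame.remaining() + " bytes"); // prints "27 bytes"
    }
}
```

The correlation ID is what lets a client pipeline several requests over one connection and still pair each response with its request.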

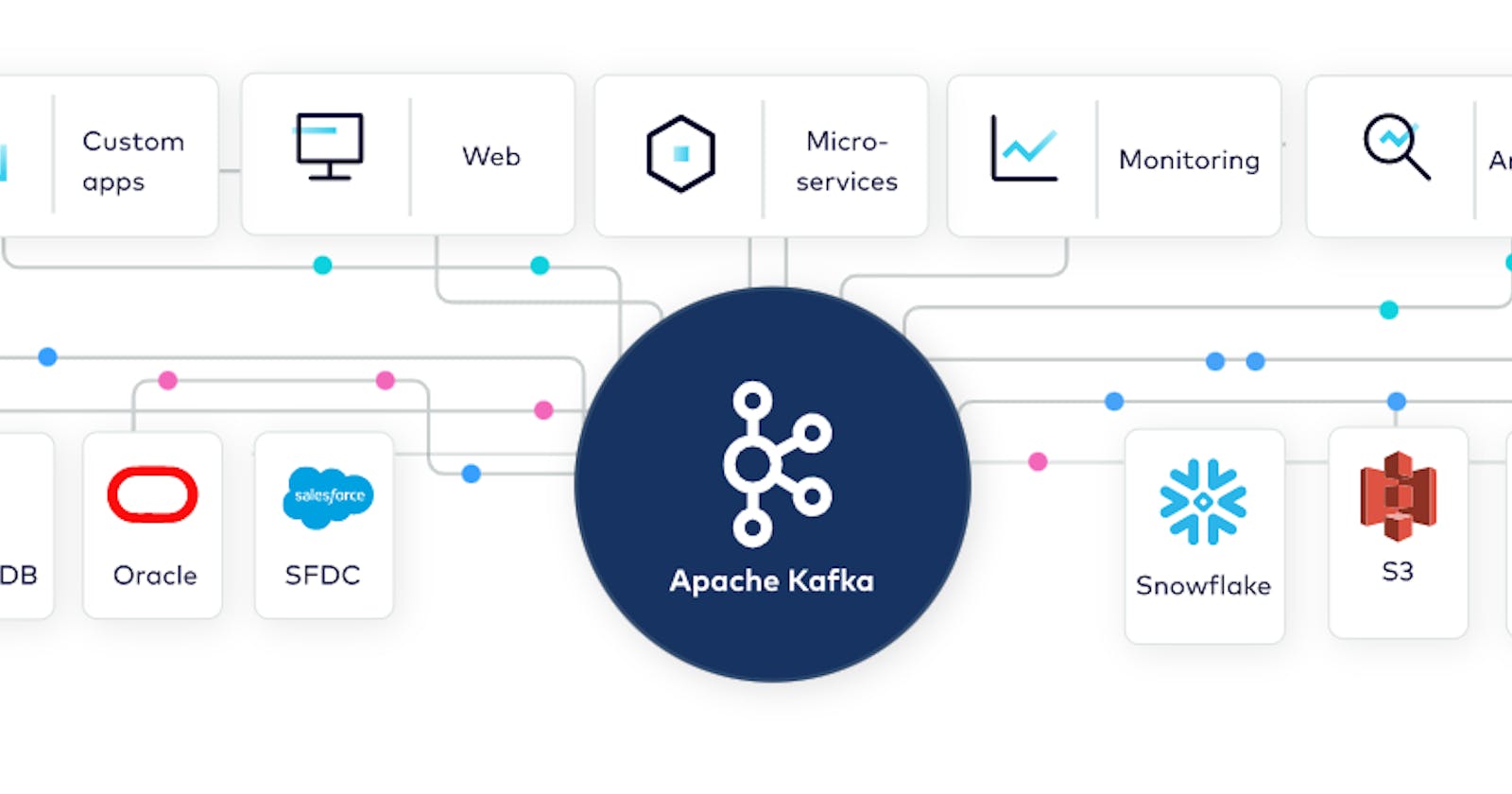

Kafka Connect

Kafka Connect is a framework for connecting Kafka with external systems, such as databases, search engines, and data warehouses. It allows you to import and export data between Kafka and these systems in a scalable and fault-tolerant manner. By leveraging Kafka Connect, you can easily integrate various systems in your microservices architecture.

Kafka Streams

Kafka Streams is a library for building stream-processing applications using Kafka. It provides a high-level API for performing complex transformations and aggregations on data streams in real time. By utilizing Kafka Streams, you can implement powerful stream processing logic within your microservices, which can help you derive insights from data, enrich data, or perform real-time analytics.
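As an example of the kind of aggregation Kafka Streams handles, a windowed count groups records by key and by fixed, non-overlapping time windows (tumbling windows). In the Streams DSL this is roughly groupByKey(), windowedBy(TimeWindows.ofSizeWithNoGrace(...)), and count(); Streams additionally keeps the counts in fault-tolerant state stores and handles late records. The plain-Java sketch below computes only the arithmetic, to show what the result contains. TumblingWindowCount and its Event record are hypothetical, and records require Java 16 or later.

```java
import java.util.List;
import java.util.Map;
import java.util.TreeMap;

final class TumblingWindowCount {
    record Event(String key, long timestampMs) {}

    // Start of the tumbling window containing the timestamp (assumes non-negative timestamps).
    static long windowStart(long timestampMs, long windowSizeMs) {
        return timestampMs - (timestampMs % windowSizeMs);
    }

    // Count events per "key@windowStart", sorted for readable output.
    static Map<String, Long> count(List<Event> events, long windowSizeMs) {
        Map<String, Long> counts = new TreeMap<>();
        for (Event e : events) {
            String bucket = e.key() + "@" + windowStart(e.timestampMs(), windowSizeMs);
            counts.merge(bucket, 1L, Long::sum);
        }
        return counts;
    }

    public static void main(String[] args) {
        List<Event> events = List.of(
                new Event("shoes", 1_000), new Event("shoes", 59_000),
                new Event("shoes", 61_000), new Event("hats", 30_000));
        System.out.println(count(events, 60_000));
        // prints {hats@0=1, shoes@0=2, shoes@60000=1}
    }
}
```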

With a solid understanding of Kafka's key features and fundamentals, you can begin to explore how Kafka fits into the microservices architecture and how it can be leveraged to build robust, scalable applications.

Microservice Architecture and Kafka

Event-Driven Architecture

Event-Driven Architecture (EDA) is a design paradigm where components of a system communicate through asynchronous events. This approach promotes loose coupling, as components don't need to be aware of each other's existence. Kafka is an ideal choice for implementing EDA in microservices, as it provides a highly scalable, fault-tolerant, and low-latency platform for sending and receiving events.

Advantages of Using Kafka in Microservices

There are several advantages to using Kafka in a microservices architecture, including:

Decoupling: Kafka's publish-subscribe model allows producers and consumers to communicate without directly depending on each other, promoting loose coupling and reducing the risk of cascading failures.

Scalability: Kafka's horizontal scalability enables you to handle increasing workloads and data volumes in your microservices, ensuring your system can grow to meet demand.

Resilience: Kafka's fault tolerance and replication features ensure that your system can continue operating even in the face of broker failures, promoting overall system resilience.

Real-time processing: Kafka's low latency enables real-time processing of data, which is essential for many microservices use cases, such as analytics, fraud detection, and personalization.

Installation Guide

Installing Kafka

Local System

To install Kafka on your system, follow these steps:

Download the latest Kafka release from the official website.

Extract the downloaded archive.

Install Java (Kafka requires Java to run).

Configure Kafka's properties (such as zookeeper.connect and broker.id).

Start the ZooKeeper and Kafka servers.

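For detailed installation instructions, consult the official documentation. Note that these steps describe a ZooKeeper-based setup: Kafka 4.0 and later no longer support ZooKeeper and run only in KRaft mode, which the official quickstart covers.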

Amazon Managed Streaming for Apache Kafka (Amazon MSK)

Amazon MSK is a fully managed Kafka service that simplifies the deployment, scaling, and management of Kafka clusters. It is compatible with the existing Kafka APIs and tooling, allowing you to leverage your existing skills and knowledge.

To get started with Amazon MSK, follow these steps:

Sign up for an AWS account if you don't have one.

Navigate to the Amazon MSK console.

Create a Kafka cluster and configure the necessary settings.

Launch and connect your producers and consumers to the Kafka cluster.

For detailed setup instructions, consult the Amazon MSK documentation.

Running Kafka Locally

To run Kafka locally, follow these steps:

Open a terminal window and navigate to the directory where you extracted Kafka.

Start the ZooKeeper server by running the following command:

```shell
bin/zookeeper-server-start.sh config/zookeeper.properties
```

Open another terminal window and start the Kafka broker by running the following command:

```shell
bin/kafka-server-start.sh config/server.properties
```

With Kafka installed and running, you can now proceed to explore its capabilities and integrate it into your microservices architecture.

Kafka Use Cases

Kafka can be applied to a wide range of scenarios, including:

Message queues: Kafka can replace traditional message queues like RabbitMQ and ActiveMQ.

Event sourcing: Kafka supports event sourcing patterns, enabling services to store and process historical data.

Log aggregation: Kafka can collect and process logs from multiple sources, facilitating monitoring and analysis.

Stream processing: Kafka can be used with stream processing frameworks like Apache Flink or Kafka Streams to analyze and process data in real time.
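The event sourcing use case is easiest to see in code: instead of storing an order's current state, a service can rebuild it by replaying the order's events from the beginning of a topic (for example, after seeking a consumer to the earliest offset). The sketch below shows only the replay step, as a fold over an in-memory list; the event type names and the OrderEvent and OrderState records are hypothetical, and records require Java 16 or later.

```java
import java.util.List;

final class EventSourcingReplay {
    // Hypothetical events for a single order, in the order they were appended to the log.
    record OrderEvent(String type, int quantityDelta) {}

    record OrderState(String status, int quantity) {
        static final OrderState EMPTY = new OrderState("NONE", 0);

        // Apply one event to produce the next state; existing states are never mutated.
        OrderState apply(OrderEvent e) {
            switch (e.type()) {
                case "OrderCreated": return new OrderState("CREATED", quantity + e.quantityDelta());
                case "ItemAdded":    return new OrderState(status, quantity + e.quantityDelta());
                case "ItemRemoved":  return new OrderState(status, quantity - e.quantityDelta());
                case "OrderShipped": return new OrderState("SHIPPED", quantity);
                default:             return this; // ignore event types this service does not know
            }
        }
    }

    // Replaying the full history from the first event yields the current state.
    static OrderState replay(List<OrderEvent> history) {
        OrderState state = OrderState.EMPTY;
        for (OrderEvent e : history) {
            state = state.apply(e);
        }
        return state;
    }

    public static void main(String[] args) {
        List<OrderEvent> history = List.of(
                new OrderEvent("OrderCreated", 2), new OrderEvent("ItemAdded", 1),
                new OrderEvent("ItemRemoved", 1), new OrderEvent("OrderShipped", 0));
        System.out.println(replay(history)); // prints OrderState[status=SHIPPED, quantity=2]
    }
}
```

In Kafka terms, the history would be all records for one order ID, which stay in order because they share a key and therefore a partition. Long histories are often paired with periodic snapshots to bound replay time.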

Case Studies

LinkedIn: Kafka was originally developed at LinkedIn to handle large-scale data processing needs. It is now used for various use cases, including tracking user activities and serving as the backbone for LinkedIn's real-time analytics infrastructure.

Netflix: Netflix uses Kafka for event-driven microservices, log aggregation, and real-time analytics, enabling them to provide personalized content recommendations and monitor system health.

Uber: Uber leverages Kafka to process massive volumes of event data, powering their real-time data pipeline, which enables efficient driver dispatching, ETA calculations, and fraud detection.

Industries Utilizing Kafka

Kafka has become popular across numerous industries, including:

Finance: Banks and financial institutions use Kafka for real-time fraud detection, risk analysis, and transaction processing.

Telecommunications: Telecom companies employ Kafka for network monitoring, log aggregation, and real-time analytics to optimize network performance and customer experience.

Healthcare: Kafka is used in healthcare for real-time patient monitoring, data analysis, and integration between systems and devices.

Retail: Retailers leverage Kafka for inventory management, real-time pricing updates, and personalized marketing campaigns.

Sample Project: Real-Time Order Processing System

In this section, we will walk through a use case involving a real-time order processing system for an e-commerce platform. The system will handle the following tasks:

Accept new orders from customers.

Notify the inventory system to update stock levels.

Inform the shipping system to prepare the order for delivery.

We will implement this system using Kafka as the message broker and Java for the application code.

Kafka Topics

If you run the sample project on Amazon MSK instead of a local broker, consult the Amazon MSK documentation for how to connect clients to your cluster.

We will create three topics to handle the different stages of order processing:

- orders: This topic will store new orders placed by customers.

- inventory-updates: This topic will store inventory updates triggered by new orders.

- shipping-requests: This topic will store shipping requests generated after inventory updates.

How to create Kafka Topics?

The kafka-topics.sh script is a command-line utility for managing topics in a Kafka cluster. You can use this script to create, list, alter, and delete topics. The sample project and its extension use five topics in total (the three above plus two introduced later). Here's how you can create these topics using the kafka-topics.sh script.

To create a new topic, use the following command syntax:

```shell
bin/kafka-topics.sh --create --bootstrap-server <bootstrap-server> --replication-factor <replication-factor> --partitions <num-partitions> --topic <topic-name>
```

Replace <bootstrap-server>, <replication-factor>, <num-partitions>, and <topic-name> with appropriate values for your Kafka cluster and topic configuration.

For our sample project, assuming your Kafka broker is running on localhost:9092, you can create the required topics with the following commands:

```shell
# Create 'orders' topic
bin/kafka-topics.sh --create --bootstrap-server localhost:9092 --replication-factor 1 --partitions 3 --topic orders

# Create 'inventory-updates' topic
bin/kafka-topics.sh --create --bootstrap-server localhost:9092 --replication-factor 1 --partitions 3 --topic inventory-updates

# Create 'shipping-requests' topic
bin/kafka-topics.sh --create --bootstrap-server localhost:9092 --replication-factor 1 --partitions 3 --topic shipping-requests

# Create 'order-status-updates' topic
bin/kafka-topics.sh --create --bootstrap-server localhost:9092 --replication-factor 1 --partitions 3 --topic order-status-updates

# Create 'order-analytics' topic
bin/kafka-topics.sh --create --bootstrap-server localhost:9092 --replication-factor 1 --partitions 3 --topic order-analytics
```

These commands create five topics with a replication factor of 1 and three partitions each. You can adjust the replication factor and the number of partitions according to your specific requirements and Kafka cluster setup. Note that in a production environment, it's recommended to set the replication factor to a value greater than 1 for fault tolerance and high availability.

Command Model

A command model is an approach to handling actions in a distributed system, where commands represent actions that need to be performed. In our e-commerce order management system, we can implement the command model by sending commands as events in Kafka. This way, services can produce and consume command events to perform specific actions related to order processing.

For example, consider the following command events in our order management system:

Create Order

Update Order Status

Add Item to Order

Remove Item from Order

Process Payment

These commands can be sent as events to corresponding Kafka topics, and the services responsible for handling these actions can consume these events and perform the required tasks.
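A minimal sketch of the consuming side of the command model, assuming each command travels as a Kafka record keyed by order ID and has already been deserialized into one of the hypothetical record types below (Process Payment is left out for brevity). The handler dispatches each command to the action it requests and rejects commands for unknown orders. It keeps state in memory only and requires Java 16 or later for records and instanceof patterns.

```java
import java.util.ArrayList;
import java.util.HashMap;
import java.util.List;
import java.util.Map;

// Hypothetical command types for some of the commands listed above.
interface OrderCommand {
    String orderId(); // also the Kafka record key, so one order's commands stay in order
}
record CreateOrder(String orderId) implements OrderCommand {}
record AddItemToOrder(String orderId, String sku) implements OrderCommand {}
record RemoveItemFromOrder(String orderId, String sku) implements OrderCommand {}
record UpdateOrderStatus(String orderId, String status) implements OrderCommand {}

final class OrderCommandHandler {
    private final Map<String, List<String>> items = new HashMap<>();
    private final Map<String, String> statuses = new HashMap<>();

    // Called once per consumed command record.
    void handle(OrderCommand command) {
        if (command instanceof CreateOrder c) {
            items.put(c.orderId(), new ArrayList<>());
            statuses.put(c.orderId(), "CREATED");
        } else if (command instanceof AddItemToOrder a) {
            itemsOf(a.orderId()).add(a.sku());
        } else if (command instanceof RemoveItemFromOrder r) {
            itemsOf(r.orderId()).remove(r.sku());
        } else if (command instanceof UpdateOrderStatus u) {
            requireKnown(u.orderId());
            statuses.put(u.orderId(), u.status());
        } else {
            throw new IllegalArgumentException("Unsupported command: " + command);
        }
    }

    List<String> currentItems(String orderId) { return List.copyOf(itemsOf(orderId)); }

    String status(String orderId) {
        requireKnown(orderId);
        return statuses.get(orderId);
    }

    private List<String> itemsOf(String orderId) {
        requireKnown(orderId);
        return items.get(orderId);
    }

    private void requireKnown(String orderId) {
        if (!items.containsKey(orderId)) {
            throw new IllegalStateException("Unknown order " + orderId);
        }
    }

    public static void main(String[] args) {
        OrderCommandHandler handler = new OrderCommandHandler();
        List<OrderCommand> commands = List.of(
                new CreateOrder("o-1"), new AddItemToOrder("o-1", "sku-1"),
                new AddItemToOrder("o-1", "sku-2"), new RemoveItemFromOrder("o-1", "sku-1"),
                new UpdateOrderStatus("o-1", "CONFIRMED"));
        commands.forEach(handler::handle);
        System.out.println(handler.currentItems("o-1") + " " + handler.status("o-1")); // prints [sku-2] CONFIRMED
    }
}
```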

Choreography

Choreography is a decentralized approach to managing interactions between services in a distributed system. Instead of relying on a central orchestrator, services react to events produced by other services and perform their tasks accordingly. In the context of our e-commerce order management system, we can implement choreography using Kafka by allowing services to produce and consume events related to order processing.

For example, when an order is created, the Order Service can produce an OrderCreated event and publish it to a Kafka topic. Other services that need to react to this event, such as the Inventory Service or the Payment Service, can consume the OrderCreated event and perform their tasks, like reserving the items in the inventory or initiating the payment process.
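The choreography described above can be sketched with an in-memory stand-in for Kafka topics, where every subscriber of a topic sees every event, much like independent consumer groups reading the same topic. The topic names order-created and inventory-reserved, and the InMemoryEventBus and ChoreographyDemo classes, are hypothetical. Unlike Kafka, this bus is synchronous and stores nothing; it only shows the wiring, in which no service calls another directly.

```java
import java.util.ArrayList;
import java.util.HashMap;
import java.util.List;
import java.util.Map;
import java.util.function.Consumer;

// Minimal publish-subscribe bus: handlers are invoked synchronously in subscription order.
final class InMemoryEventBus {
    private final Map<String, List<Consumer<String>>> subscribers = new HashMap<>();

    void subscribe(String topic, Consumer<String> handler) {
        subscribers.computeIfAbsent(topic, t -> new ArrayList<>()).add(handler);
    }

    void publish(String topic, String orderId) {
        subscribers.getOrDefault(topic, List.of()).forEach(h -> h.accept(orderId));
    }
}

final class ChoreographyDemo {
    static List<String> placeOrder(String orderId) {
        InMemoryEventBus bus = new InMemoryEventBus();
        List<String> log = new ArrayList<>();

        // Inventory and Payment services react to OrderCreated without knowing about each other.
        bus.subscribe("order-created", id -> {
            log.add("inventory reserved " + id);
            bus.publish("inventory-reserved", id); // the Inventory Service emits its own event
        });
        bus.subscribe("order-created", id -> log.add("payment initiated " + id));
        // A downstream service reacts to the Inventory Service's event.
        bus.subscribe("inventory-reserved", id -> log.add("shipping prepared " + id));

        // The Order Service only announces the fact; no orchestrator tells anyone what to do.
        bus.publish("order-created", orderId);
        return log;
    }

    public static void main(String[] args) {
        placeOrder("order-42").forEach(System.out::println);
    }
}
```

Adding another reaction (say, a loyalty-points service) means adding one more subscription; the Order Service does not change.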

By implementing choreography, we can create a more decentralized and flexible system, where services can react to events and collaborate without relying on a central orchestrator. This approach can help improve the maintainability and scalability of the order management system, as adding or modifying services becomes less complex.

Using the command model and choreography, our e-commerce order management system can efficiently process orders in a distributed and scalable manner. Leveraging Kafka as the backbone for event communication ensures that the system is fault-tolerant, resilient, and capable of handling high volumes of events.

Java Producer: Order Service

The Order Service is responsible for accepting new orders and publishing them to the orders topic. Here's a sample implementation:

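```java
import java.util.Properties;

import org.apache.kafka.clients.producer.KafkaProducer;
import org.apache.kafka.clients.producer.ProducerRecord;

public class OrderService implements AutoCloseable {

    private static final String ORDERS_TOPIC = "orders";

    private final KafkaProducer<String, Order> producer;

    // producerProps must contain at least "bootstrap.servers".
    public OrderService(Properties producerProps) {
        producerProps.put("key.serializer", "org.apache.kafka.common.serialization.StringSerializer");
        producerProps.put("value.serializer", "io.confluent.kafka.serializers.KafkaJsonSerializer");
        this.producer = new KafkaProducer<>(producerProps);
    }

    public void processOrder(Order order) {
        // Key by order ID so all records for one order go to the same partition.
        producer.send(new ProducerRecord<>(ORDERS_TOPIC, order.getId(), order), (metadata, exception) -> {
            // send() is asynchronous; delivery failures are reported here.
            if (exception != null) {
                System.err.println("Failed to publish order " + order.getId() + ": " + exception);
            }
        });
    }

    @Override
    public void close() {
        producer.close(); // flushes buffered records before shutting down
    }
}
```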

Java Consumer: Inventory Service

The Inventory Service will consume messages from the orders topic, update the inventory, and publish inventory updates to the inventory-updates topic:

```java
import java.time.Duration;
import java.util.Collections;
import java.util.Properties;

import org.apache.kafka.clients.consumer.ConsumerRecord;
import org.apache.kafka.clients.consumer.ConsumerRecords;
import org.apache.kafka.clients.consumer.KafkaConsumer;
import org.apache.kafka.clients.producer.KafkaProducer;
import org.apache.kafka.clients.producer.ProducerRecord;

public class InventoryService {

    private static final String ORDERS_TOPIC = "orders";
    private static final String INVENTORY_UPDATES_TOPIC = "inventory-updates";

    private final KafkaConsumer<String, Order> consumer;
    private final KafkaProducer<String, InventoryUpdate> producer;

    // consumerProps needs "bootstrap.servers" and a "group.id" (e.g. "inventory-service").
    public InventoryService(Properties consumerProps, Properties producerProps) {
        consumerProps.put("key.deserializer", "org.apache.kafka.common.serialization.StringDeserializer");
        consumerProps.put("value.deserializer", "io.confluent.kafka.serializers.KafkaJsonDeserializer");
        // Tell the JSON deserializer which class to build; otherwise it returns a generic Map.
        consumerProps.put("json.value.type", Order.class.getName());
        this.consumer = new KafkaConsumer<>(consumerProps);
        this.consumer.subscribe(Collections.singletonList(ORDERS_TOPIC));

        producerProps.put("key.serializer", "org.apache.kafka.common.serialization.StringSerializer");
        producerProps.put("value.serializer", "io.confluent.kafka.serializers.KafkaJsonSerializer");
        this.producer = new KafkaProducer<>(producerProps);
    }

    public void run() {
        while (true) {
            ConsumerRecords<String, Order> records = consumer.poll(Duration.ofMillis(100));
            for (ConsumerRecord<String, Order> record : records) {
                InventoryUpdate inventoryUpdate = updateInventory(record.value());
                producer.send(new ProducerRecord<>(INVENTORY_UPDATES_TOPIC, inventoryUpdate.getOrderId(), inventoryUpdate));
            }
        }
    }

    private InventoryUpdate updateInventory(Order order) {
        // Update inventory based on the order and return an InventoryUpdate object.
        throw new UnsupportedOperationException("Inventory logic not implemented yet");
    }
}
```

Java Consumer: Shipping Service

The Shipping Service will consume messages from the inventory-updates topic and create shipping requests, publishing them to the shipping-requests topic:

```java
import java.time.Duration;
import java.util.Collections;
import java.util.Properties;

import org.apache.kafka.clients.consumer.ConsumerRecord;
import org.apache.kafka.clients.consumer.ConsumerRecords;
import org.apache.kafka.clients.consumer.KafkaConsumer;
import org.apache.kafka.clients.producer.KafkaProducer;
import org.apache.kafka.clients.producer.ProducerRecord;

public class ShippingService {

    private static final String INVENTORY_UPDATES_TOPIC = "inventory-updates";
    private static final String SHIPPING_REQUESTS_TOPIC = "shipping-requests";

    private final KafkaConsumer<String, InventoryUpdate> consumer;
    private final KafkaProducer<String, ShippingRequest> producer;

    // consumerProps needs "bootstrap.servers" and a "group.id" (e.g. "shipping-service").
    public ShippingService(Properties consumerProps, Properties producerProps) {
        consumerProps.put("key.deserializer", "org.apache.kafka.common.serialization.StringDeserializer");
        consumerProps.put("value.deserializer", "io.confluent.kafka.serializers.KafkaJsonDeserializer");
        // Deserialize JSON values into InventoryUpdate objects instead of generic Maps.
        consumerProps.put("json.value.type", InventoryUpdate.class.getName());
        this.consumer = new KafkaConsumer<>(consumerProps);
        this.consumer.subscribe(Collections.singletonList(INVENTORY_UPDATES_TOPIC));

        producerProps.put("key.serializer", "org.apache.kafka.common.serialization.StringSerializer");
        producerProps.put("value.serializer", "io.confluent.kafka.serializers.KafkaJsonSerializer");
        this.producer = new KafkaProducer<>(producerProps);
    }

    public void run() {
        while (true) {
            ConsumerRecords<String, InventoryUpdate> records = consumer.poll(Duration.ofMillis(100));
            for (ConsumerRecord<String, InventoryUpdate> record : records) {
                ShippingRequest shippingRequest = createShippingRequest(record.value());
                producer.send(new ProducerRecord<>(SHIPPING_REQUESTS_TOPIC, shippingRequest.getOrderId(), shippingRequest));
            }
        }
    }

    private ShippingRequest createShippingRequest(InventoryUpdate inventoryUpdate) {
        // Create a ShippingRequest object based on the InventoryUpdate and return it.
        throw new UnsupportedOperationException("Shipping logic not implemented yet");
    }
}
```

Running the Sample Project

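Make sure Kafka is running and create the necessary topics using the kafka-topics.sh script.

Implement the Order, InventoryUpdate, and ShippingRequest classes to represent the data model for the system.

Configure the necessary properties for the Kafka producers and consumers: at minimum bootstrap.servers, plus a distinct group.id for each consuming service.

Instantiate the OrderService, InventoryService, and ShippingService classes and run them in your application. Because each run() method loops forever, start each consuming service on its own thread or in its own process.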
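The data model step could look something like the following. Only Order.getId() and getOrderId() on the other two classes come from the service code; every other field is an assumption, since the article does not specify them. Because the services use Confluent's Jackson-based KafkaJsonSerializer and KafkaJsonDeserializer, the sketch uses plain mutable classes with a no-argument constructor plus getters and setters, which Jackson can map without annotations. In a real project, each class would typically be public and live in its own file.

```java
// Hypothetical data model for the sample project; field choices are illustrative.
class Order {
    private String id;
    private String productId;
    private int quantity;

    public Order() {} // no-argument constructor for Jackson

    public Order(String id, String productId, int quantity) {
        this.id = id;
        this.productId = productId;
        this.quantity = quantity;
    }

    public String getId() { return id; }
    public void setId(String id) { this.id = id; }
    public String getProductId() { return productId; }
    public void setProductId(String productId) { this.productId = productId; }
    public int getQuantity() { return quantity; }
    public void setQuantity(int quantity) { this.quantity = quantity; }
}

class InventoryUpdate {
    private String orderId;
    private String productId;
    private int quantityReserved;

    public InventoryUpdate() {}

    public InventoryUpdate(String orderId, String productId, int quantityReserved) {
        this.orderId = orderId;
        this.productId = productId;
        this.quantityReserved = quantityReserved;
    }

    public String getOrderId() { return orderId; }
    public void setOrderId(String orderId) { this.orderId = orderId; }
    public String getProductId() { return productId; }
    public void setProductId(String productId) { this.productId = productId; }
    public int getQuantityReserved() { return quantityReserved; }
    public void setQuantityReserved(int quantityReserved) { this.quantityReserved = quantityReserved; }
}

class ShippingRequest {
    private String orderId;
    private String productId;
    private int quantity;

    public ShippingRequest() {}

    public ShippingRequest(String orderId, String productId, int quantity) {
        this.orderId = orderId;
        this.productId = productId;
        this.quantity = quantity;
    }

    public String getOrderId() { return orderId; }
    public void setOrderId(String orderId) { this.orderId = orderId; }
    public String getProductId() { return productId; }
    public void setProductId(String productId) { this.productId = productId; }
    public int getQuantity() { return quantity; }
    public void setQuantity(int quantity) { this.quantity = quantity; }
}

final class DataModelDemo {
    public static void main(String[] args) {
        // Follow one order through the pipeline's data types.
        Order order = new Order("order-1", "sku-1", 2);
        InventoryUpdate update = new InventoryUpdate(order.getId(), order.getProductId(), order.getQuantity());
        ShippingRequest request = new ShippingRequest(update.getOrderId(), update.getProductId(), update.getQuantityReserved());
        System.out.println(request.getOrderId() + " x" + request.getQuantity()); // prints order-1 x2
    }
}
```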

By implementing this real-time order processing system using Kafka, we have demonstrated how Kafka can be utilized to create a decoupled, event-driven architecture. This sample project can be further extended to include additional functionality such as real-time order tracking and analytics.

Extending the Sample Project: Real-Time Order Tracking and Analytics

To further enhance our sample project, we can add real-time order tracking and analytics features. This would provide customers with order status updates and allow the e-commerce platform to gain insights into sales patterns, product popularity, and more.

Kafka Topics

We will create two additional topics to handle the new features:

- order-status-updates: This topic will store order status updates to be sent to customers.

- order-analytics: This topic will store order data for analytical purposes.

Java Producer: Order Status Updates

We can modify the existing InventoryService and ShippingService classes to publish order status updates to the order-status-updates topic. For example, in the InventoryService class, we can add the following code after updating the inventory:

```java
// Inside InventoryService class.
// Declare the existing producer as KafkaProducer<String, Object> so the same
// JSON-serializing producer can send both InventoryUpdate and OrderStatusUpdate values.

private static final String ORDER_STATUS_UPDATES_TOPIC = "order-status-updates";

// ...

private void sendOrderStatusUpdate(InventoryUpdate inventoryUpdate) {
    OrderStatusUpdate statusUpdate = new OrderStatusUpdate(inventoryUpdate.getOrderId(), "Inventory Updated");
    producer.send(new ProducerRecord<>(ORDER_STATUS_UPDATES_TOPIC, statusUpdate.getOrderId(), statusUpdate));
}

// Inside run() method, after sending the inventory update
sendOrderStatusUpdate(inventoryUpdate);
```

Similarly, add the corresponding code to the ShippingService class after creating a shipping request.

Java Consumer: Analytics Service

The Analytics Service will consume messages from the order-analytics topic and perform real-time analysis on the order data. Here's a sample implementation:

```java
import java.time.Duration;
import java.util.Collections;
import java.util.Properties;

import org.apache.kafka.clients.consumer.ConsumerRecord;
import org.apache.kafka.clients.consumer.ConsumerRecords;
import org.apache.kafka.clients.consumer.KafkaConsumer;

public class AnalyticsService {

    private static final String ORDER_ANALYTICS_TOPIC = "order-analytics";

    private final KafkaConsumer<String, Order> consumer;

    // consumerProps needs "bootstrap.servers" and a "group.id" (e.g. "analytics-service").
    public AnalyticsService(Properties consumerProps) {
        consumerProps.put("key.deserializer", "org.apache.kafka.common.serialization.StringDeserializer");
        consumerProps.put("value.deserializer", "io.confluent.kafka.serializers.KafkaJsonDeserializer");
        // Deserialize JSON values into Order objects instead of generic Maps.
        consumerProps.put("json.value.type", Order.class.getName());
        this.consumer = new KafkaConsumer<>(consumerProps);
        this.consumer.subscribe(Collections.singletonList(ORDER_ANALYTICS_TOPIC));
    }

    public void run() {
        while (true) {
            ConsumerRecords<String, Order> records = consumer.poll(Duration.ofMillis(100));
            for (ConsumerRecord<String, Order> record : records) {
                processOrderAnalytics(record.value());
            }
        }
    }

    private void processOrderAnalytics(Order order) {
        // Perform real-time analysis on the order data and update an analytics dashboard or data store.
    }
}
```
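processOrderAnalytics is left as a stub above. One possible body keeps running per-product totals in memory, fed with the product ID and quantity read from each Order (both fields are assumptions, since the article does not define Order). OrderAnalytics is a hypothetical name, and a production service would more likely keep this state in a database or a Kafka Streams state store so that it survives restarts.

```java
import java.util.Map;
import java.util.concurrent.ConcurrentHashMap;

final class OrderAnalytics {
    // Concurrent maps so a dashboard thread can read while the poll loop updates.
    private final Map<String, Long> ordersPerProduct = new ConcurrentHashMap<>();
    private final Map<String, Long> unitsPerProduct = new ConcurrentHashMap<>();

    // Called once per consumed order, e.g. with order.getProductId() and order.getQuantity().
    void process(String productId, int quantity) {
        ordersPerProduct.merge(productId, 1L, Long::sum);
        unitsPerProduct.merge(productId, (long) quantity, Long::sum);
    }

    long orderCount(String productId) { return ordersPerProduct.getOrDefault(productId, 0L); }

    long unitsSold(String productId) { return unitsPerProduct.getOrDefault(productId, 0L); }

    // Product with the most orders so far, or null before any order has been processed.
    String topProduct() {
        return ordersPerProduct.entrySet().stream()
                .max(Map.Entry.comparingByValue())
                .map(Map.Entry::getKey)
                .orElse(null);
    }

    public static void main(String[] args) {
        OrderAnalytics analytics = new OrderAnalytics();
        analytics.process("shoes", 2);
        analytics.process("hats", 1);
        analytics.process("shoes", 1);
        String top = analytics.topProduct();
        System.out.println(top + " " + analytics.orderCount(top) + " " + analytics.unitsSold(top)); // prints shoes 2 3
    }
}
```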

Running the Extended Sample Project

Create the additional topics using the kafka-topics.sh script.

Implement the OrderStatusUpdate class to represent the order status updates.

Update the existing services to publish order status updates and order data to the new topics.

Configure the necessary properties for the Analytics Service's Kafka consumer, including its own group.id.

Instantiate the AnalyticsService class and run it in your application, on its own thread since run() loops forever.

By extending the sample project with real-time order tracking and analytics features, we have demonstrated how Kafka can be used to further enhance the capabilities of a microservices-based application. The decoupled, event-driven architecture facilitated by Kafka enables the seamless integration of new features and services, empowering developers to create scalable, robust, and high-performance applications.

Example: Kafka Connect and JDBC Sink Connector

To demonstrate the use of Kafka Connect, let's assume you want to store completed orders from the sample project in a relational database. We can use the Confluent JDBC Sink Connector to achieve this.

Download and install the Confluent JDBC Sink Connector from Confluent Hub.

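Create a connect-jdbc-sink.properties file with the following configuration:

```properties
name=JDBCSinkConnector
connector.class=io.confluent.connect.jdbc.JdbcSinkConnector
tasks.max=1
topics=completed-orders
connection.url=jdbc:mysql://localhost:3306/orders_db
connection.user=myuser
connection.password=mypassword
table.name.format=completed_orders
key.converter=org.apache.kafka.connect.storage.StringConverter
value.converter=io.confluent.connect.avro.AvroConverter
value.converter.schema.registry.url=http://localhost:8081
insert.mode=insert
auto.create=true
```

Replace the connection.url, connection.user, and connection.password values with your database connection details. Depending on the connector version, the MySQL JDBC driver may not be bundled, in which case it must be added to the connector's classpath.

Start the Kafka Connect service by running the following command:

```shell
bin/connect-standalone.sh config/connect-standalone.properties connect-jdbc-sink.properties
```

This configuration assumes that a completed-orders topic exists and that its values are Avro records registered in Schema Registry at localhost:8081. The JDBC sink needs a schema to map record fields to table columns, so the plain JSON written by the services above would first have to be produced with a schema (for example, with Confluent's Avro serializer). With that in place, the JDBC Sink Connector automatically stores completed orders in the specified relational database table.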
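The steps above use standalone mode. If Kafka Connect runs in distributed mode, connectors are submitted to its REST API instead (port 8083 by default), where PUT /connectors/{name}/config creates the connector if it does not exist and updates it otherwise. The sketch below uses the JDK's java.net.http client and assumes a Connect worker at http://localhost:8083, reusing the settings from the properties file above. ConnectRestSketch and its methods are hypothetical; the code only builds the request, and the commented-out line shows where it would be sent.

```java
import java.net.URI;
import java.net.http.HttpRequest;
import java.util.LinkedHashMap;
import java.util.Map;
import java.util.stream.Collectors;

final class ConnectRestSketch {
    // Same connector settings as connect-jdbc-sink.properties (placeholders for credentials).
    static Map<String, String> jdbcSinkConfig() {
        Map<String, String> config = new LinkedHashMap<>();
        config.put("connector.class", "io.confluent.connect.jdbc.JdbcSinkConnector");
        config.put("tasks.max", "1");
        config.put("topics", "completed-orders");
        config.put("connection.url", "jdbc:mysql://localhost:3306/orders_db");
        config.put("connection.user", "myuser");
        config.put("connection.password", "mypassword");
        config.put("table.name.format", "completed_orders");
        config.put("key.converter", "org.apache.kafka.connect.storage.StringConverter");
        config.put("value.converter", "io.confluent.connect.avro.AvroConverter");
        config.put("value.converter.schema.registry.url", "http://localhost:8081");
        config.put("insert.mode", "insert");
        config.put("auto.create", "true");
        return config;
    }

    // Minimal JSON object writer; assumes keys and values contain no characters needing escapes.
    static String toJson(Map<String, String> map) {
        return map.entrySet().stream()
                .map(e -> "\"" + e.getKey() + "\":\"" + e.getValue() + "\"")
                .collect(Collectors.joining(",", "{", "}"));
    }

    static HttpRequest putConfigRequest(String connectUrl, String connectorName, Map<String, String> config) {
        return HttpRequest.newBuilder(URI.create(connectUrl + "/connectors/" + connectorName + "/config"))
                .header("Content-Type", "application/json")
                .PUT(HttpRequest.BodyPublishers.ofString(toJson(config)))
                .build();
    }

    public static void main(String[] args) {
        HttpRequest request = putConfigRequest("http://localhost:8083", "JDBCSinkConnector", jdbcSinkConfig());
        System.out.println(request.method() + " " + request.uri());
        // prints PUT http://localhost:8083/connectors/JDBCSinkConnector/config
        // To submit it to a running worker:
        // HttpClient.newHttpClient().send(request, HttpResponse.BodyHandlers.ofString());
    }
}
```

Using PUT on the config endpoint makes the call idempotent, so the same deployment script can be rerun whenever the connector settings change.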

Conclusion

Kafka has emerged as a powerful tool for building scalable, fault-tolerant, and decoupled microservice architectures. In this comprehensive guide, we explored the fundamentals of Kafka, its role in a microservice architecture, installation, use cases, case studies, and industries that benefit from its adoption. We also provided examples using AWS and Java to help you get started with your own Kafka-based applications.

By understanding and implementing Kafka in your microservice architecture, you can unlock the potential of real-time data streaming, event-driven processing, and resilient communication between services. Whether you're just starting out or an experienced developer, Kafka offers a versatile and powerful solution for managing and processing large-scale data streams in modern applications.